
POPULAR MECHANICS 25 OCTOBER 2024 - MILITARIES ARE RUSHING TO REPLACE HUMAN SOLDIERS WITH AI-POWERED ROBOTS. THAT WILL BE DISASTROUS, EXPERTS WARN

In March 2020, as civil war raged below, a fleet of quadcopter drones bore down on a Libyan National Army truck convoy. The kamikaze drones, designed to detonate their explosive payloads against enemy targets, hunted down and destroyed several trucks - trucks driven by human beings. Chillingly, the drones conducted the attack entirely on their own - no humans gave the order to attack.

The rise of the armed robot, whether on land, at sea, or in the air, has increasingly pushed humans away from the front lines. Despite this growing distance, humans still retain ultimate control over whether a robot can open fire on the battlefield. However, recent advances in artificial intelligence could sever the last link between man and machine. If that happens, the cold logic of AI robots would be the only factor that determines who lives and dies on the battlefield. Is this unsettling step inevitable, and will real humans be anywhere near the front line in the next war?

Today’s military drones allow human personnel to carry out vital missions, often from tens to even thousands of miles away. An iconic example involves airmen sitting in air-conditioned, virtual cockpits at Creech Air Force Base in Nevada while controlling armed MQ-9 Reaper drones half a world away. The rise of artificial intelligence allows these drones to be more autonomous - capable of making limited decisions about navigation and route-planning. We live in an age when AI-powered self-driving cars read signs and drive, making critical decisions that could injure or kill the humans around them. Giving robots the ability to make life or death decisions on the battlefield doesn’t really seem all that far behind.

“At some point,” Samuel Bendett, Adjunct Senior Fellow at the Center for a New American Security, tells Popular Mechanics, “military autonomy will become cost effective enough to be fielded in large numbers. At that point, it may be very difficult for humans to attempt to defend against such robotic systems attacking across multiple domains - land, air, sea, cyber, and information - prompting the defenders to utilize their own autonomy to make sense of the battlefield and make the right decision to use the right systems. Humans will still be essential, but some key battlefield functions may have to pass to non-human agents.”

A REMARKABLY CONSISTENT RULE OF WARFARE is that as weapons become more advanced, humans grow ever more removed from the act of killing. Early humans were forced to fight other humans with handheld weapons such as clubs, stones, and knives, participating in combat at the same intimate level of danger as the person they were fighting. Warfare required great physical courage, the need to put your life in danger to take someone else’s.

Over the course of thousands of years weaponry evolved, enabling humans to kill each other at increasing distances. The bow and arrow, and later, the invention of gunpowder muskets, rifles and cannon, meant that humans could kill their enemies often from positions of relative safety, like behind a tree or from inside a building. The invention of the internal combustion engine, rocket motor, nuclear weapons and the integrated circuit supercharged remote killing, leading to weapons that can kill thousands of miles away with the push of a button. Thanks to technology, that primal level of physical courage is no longer necessary.

Advances in weaponry disrupted warfare time and time again, granting huge advantages to the country that adopted them first. Artificial intelligence is not only rapidly entering the civilian sector but also the military one. Ships, aircraft and ground vehicles can operate on their own. Robotic drones like the MQ-4 Triton spy on adversary forces, robotic ground vehicles carry equipment for soldiers, and robotic helicopters re-supply friendly forces across great distances.

There is one exception, however, to the actions autonomous military drones can take: they must wait for explicit permission from a human controller before opening fire. The consensus among today’s armies is that a human must remain in the decision-making loop. A human must study the available imagery, radar, and any other evidence that a target is legitimate and hostile, or order the drone to stand down if needed. The human being may be the only thing that stands between blurry sensors, buggy software, faulty AI logic, other unforeseen factors - and tragedy.

However, that human firewall may not last. A truism of combat is that whoever shoots first wins, and having a drone wait while a human makes a decision can cede the initiative to the enemy. Warfare at its core is a competition - one with dire consequences for the losers. This makes walking away from any advantage difficult.

ORIGINAL FICTION - John Storm has enhanced DNA, making him more than four times stronger than ordinary humans. He also has a brain implant that supercharges his thinking ability, with AI search and the ability to hack into security systems. Lastly, he wears a kind of body armour that is more advanced than any ordinary bulletproof suit, with the ability to blend into the background to make him a harder target. None of these features will find their way to ordinary infantry soldiers, because they are too expensive: an army is only as good as its budget. And in Europe and the USA, military spending is suffering because of national debts arising from poor government performance, including overstaffing of civil servants, councils and MPs in a ratio that is not sustainable, with more managers than workers to support them. That means a budget black hole, with ever more deficits and a bigger black hole.

As of October 2024, in the UK the £22bn black hole that Rachel Reeves is claiming has been created not by too little money coming in, but by too much going out. The latest figures show that current spending has increased to £510.6bn so far in the 2024 financial year, which has led to the Government borrowing £79.6bn since April.

Worryingly for the Chancellor, that is significantly more than the £73bn the Office for Budget Responsibility predicted back in March, at the time of the last Conservative Budget. The ONS pinned the blame largely on rising public sector pay costs, as the total wage bill for central Government hit a fresh monthly high of £17.8bn in September. That is alongside a rise in public sector headcount, which stood at 5.94m in June.

This means it is unlikely the Government will be able to reduce its £2.8 trillion debt pile any time soon, which already serves as a huge burden in terms of finance costs.

The recent spike in interest rates meant the Government’s spending on debt interest hit £5.3bn in September, up from £4.2bn a year ago and £3.3bn 12 months before that. This means a staggering 7.1pc of government revenues are spent on debt interest payments alone.

As with the military, governments might want to consider cutting overstaffing in the public sector by replacing human staff with AI algorithms, which would not only be more efficient but also reduce the growing pensions liability.

THE MARCH 2020 DRONE ATTACK MAY WELL BE A SIGN OF THINGS TO COME, Bendett explains. “In Russia, the military is on record going back almost a decade discussing mass-scale applications of robotic and autonomous systems to remove soldiers from dangerous combat and to overwhelm adversary defenses, making it easier to conduct follow-on strikes.”

Drone versus drone warfare, Bendett says, has already taken place. “In one key engagement in March 2024, Russian combat unmanned ground vehicles advanced on Ukrainian positions as part of an assault, only to be targeted and destroyed by Ukrainian first-person-view drones. In this engagement, both sides’ systems were controlled by human operators.”

The crucible of high intensity warfare in Ukraine is pushing a greater reliance on autonomous weapons. Ukraine’s army suffers from an acute manpower shortage and has leaned on drones whenever possible. “In Ukraine,” Bendett explains, “volunteers working on domestic drone development have said on multiple occasions that robots should fight first, followed by humans.”

Experts believe the “man in the loop” is indispensable, now and for the foreseeable future, as a means of avoiding tragedy, says Zach Kallenborn, an expert on killer robots, weapons of mass destruction, and drone swarms with the Schar School of Policy and Government. “Current machine vision systems are prone to making unpredictable and easy mistakes.”

Mistakes could have major implications, such as spiraling a conflict out of control, causing accidental deaths and escalation of violence. “Imagine the autonomous weapon shoots a soldier not party to the conflict. The soldier’s death might draw his or her country into the conflict,” Kallenborn says. Or the autonomous weapon may cause an unintentional level of harm, especially if autonomous nuclear weapons are involved, he adds.

Some battlefields do benefit from greater AI autonomy when there are fewer opportunities to harm noncombatants. “An autonomous weapon protecting a ship at sea from incoming missiles, aircraft and drones is really not a huge risk. It’s unlikely a ton of civilian aircraft would be flying in the middle of a naval battle in the middle of the ocean,” Kallenborn explains.

While physical courage may not be necessary to take lives, Kallenborn notes that the human factor retains one last form of courage in the act of killing: moral courage. That humans should have ultimate responsibility for taking a life is an old argument. “During the Civil War folks objected to the use of landmines because it was a dishonorable way of waging war. If you’re going to kill a man, have the decency to pull the trigger yourself.” Removing the human component leaves only the cold logic of an artificial intelligence…and whatever errors may be hidden in that programmed logic.

If autonomous weapons authorized to open fire on humans are an inevitable future, as some armies and experts think they are, will AI ever become as proficient as humans in discerning enemy combatants from innocent bystanders? Will the armies of the future simply accept civilian casualties as the price of a quicker end to the war? These questions remain unanswered for now. And humanity may not have much time to wrestle with them before the future arrives by force.

It's a game of chess, and AI chess players are already pretty good. Take humans out of the equation and the smartest strategist wins, along with the team with the best robots. It is Robot Wars. Except that military contractors like big ships and submarines, tanks, and nuclear missiles. It should be underground bunkers and drone sentries to deter thermonuclear war and invasion across borders, not building up navies that drones can take out in days.

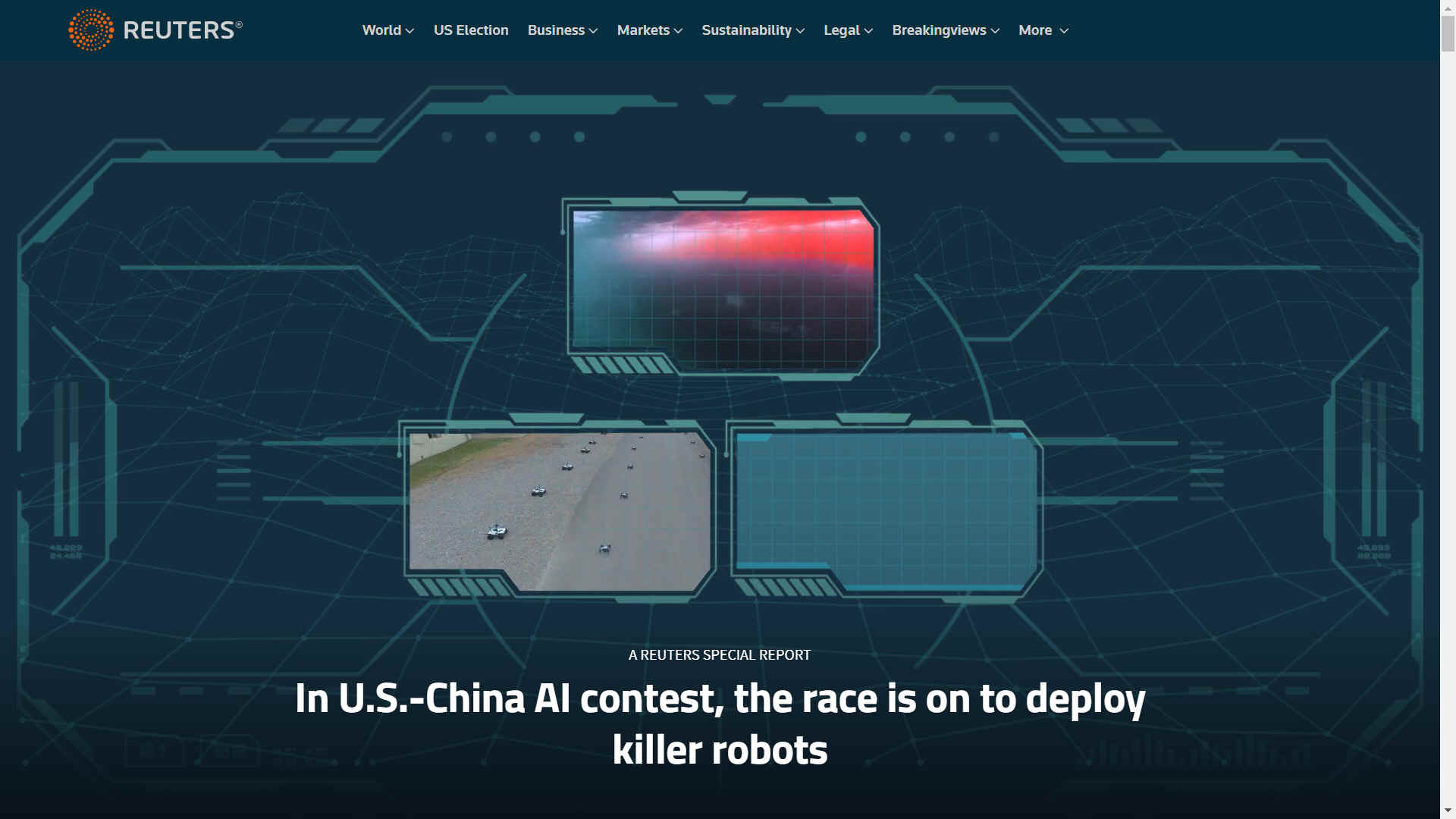

REUTERS

8 SEPTEMBER 2023

To meet the challenge of a rising China, the Australian Navy is taking two very different deep dives into advanced submarine technology.

One is pricey and slow: For a new force of up to 13 nuclear-powered attack submarines, the Australian taxpayer will fork out an average of more than AUD$28 billion ($18 billion) apiece. And the last of the subs won’t arrive until well past the middle of the century.

[What a farce: a waste of MOD and taxpayers' money, not to mention leaving the democratic free world open to attack.]

The other is cheap and fast: launching three unmanned subs, powered by artificial intelligence, called Ghost Sharks. The navy will spend just over AUD$23 million each for them – less than a tenth of 1% of the cost of each nuclear sub Australia will get. And the Ghost Sharks will be delivered by mid-2025.
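The "less than a tenth of 1%" comparison can be checked with a quick calculation. The sketch below is only illustrative: it uses the two rounded per-vessel figures quoted in this report ("just over" AUD$23 million and "more than" AUD$28 billion), so the results are approximate, and the variable names are ours rather than anything from the source.

```python
# Rounded per-vessel figures quoted in the article, in Australian dollars.
# Both are approximations ("just over" / "more than"), so treat the results as rough.
GHOST_SHARK_COST = 23_000_000        # one uncrewed Ghost Shark
NUCLEAR_SUB_COST = 28_000_000_000    # average cost of one nuclear-powered submarine

# Cost of one Ghost Shark as a percentage of one nuclear submarine.
ratio_percent = GHOST_SHARK_COST / NUCLEAR_SUB_COST * 100

# How many Ghost Sharks the price of a single nuclear submarine would buy.
ghost_sharks_per_sub = NUCLEAR_SUB_COST / GHOST_SHARK_COST

print(f"Ghost Shark cost as a share of one nuclear sub: {ratio_percent:.3f}%")
print(f"Ghost Sharks per nuclear-sub budget: {ghost_sharks_per_sub:.0f}")
```

On these rounded figures the share comes out at roughly 0.08%, which is consistent with the "less than a tenth of 1%" claim, and the price of one nuclear submarine would cover on the order of 1,200 Ghost Sharks.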

The two vessels differ starkly in complexity, capability and dimension. The uncrewed Ghost Shark is the size of a school bus, while the first of Australia’s nuclear subs will be about the length of a football field with a crew of 132. But the vast gulf in their cost and delivery speed reveals how automation powered by artificial intelligence is poised to revolutionize weapons, warfare and military power – and shape the escalating rivalry between China and the United States. Australia, one of America’s closest allies, could have dozens of lethal autonomous robots patrolling the ocean depths years before its first nuclear submarine goes on patrol.

Without the need to cocoon a crew, the design, manufacture and performance of submarines are radically transformed, says Shane Arnott. He is the senior vice-president of engineering at U.S. defense contractor Anduril, whose Australian subsidiary is building the Ghost Shark subs for the Australian Navy.

“A huge amount of the expense and systems go into supporting the humans,” Arnott said in an interview in the company’s Sydney office.

Take away the people, and submarines become much easier and cheaper to build. For starters, Ghost Shark has no pressure hull – the typically tubular, high-strength steel vessel that protects a submarine's crew and sensitive components from the immense force that water exerts at depth. Water flows freely through the Ghost Shark structure. That means Anduril can build lots of them, and fast.

Rapid production is the company’s plan. Arnott declined to say, though, how many Ghost Sharks Anduril intends to manufacture if it wins further Australian orders. But it is designing a factory to build “at scale,” he said. Anduril is also aiming to build this type of sub for the United States and its allies, including Britain, Japan, Singapore, South Korea and customers in Europe, the company told Reuters.

A need for speed is driving the project. Arnott points to an Australian government strategic assessment, the Defense Strategic Review, published in April, which found the country was entering a perilous period where “China's military build-up is now the largest and most ambitious of any country since the end of the Second World War.” A crisis could emerge with little or no warning, the review said.

“We can’t wait five to 10 years, or decades, to get stuff,” said Arnott. “The timeline is running out.”

This report is based on interviews with more than 20 former American and Australian military officers and security officials, reviews of AI research papers and Chinese military publications, as well as information from defense equipment exhibitions.

An intensifying military-technology arms race is heightening the sense of urgency. On one side are the United States and its allies, who want to preserve a world order long shaped by America’s economic and military dominance. On the other is China, which rankles at U.S. ascendancy in the region and is challenging America’s military dominance in the Asia-Pacific. Ukraine’s innovative use of technologies to resist Russia’s invasion is heating up this competition.

In this high-tech contest, seizing the upper hand across fields including AI and autonomous weapons, like Ghost Shark, could determine who comes out on top.

“Winning the software battle in this strategic competition is vital,” said Mick Ryan, a recently retired Australian army major general who studies the role of technology in warfare and has visited Ukraine during the war. “It governs everything from weather prediction, climate change models, and testing new-era nuclear weapons to developing exotic new weapons and materials that can provide a leap-ahead capability on the battlefield and beyond.”

If China wins out, it will be well placed to reshape the global political and economic order, by force if necessary, according to technology and military experts.

Most Americans alive today have only known a world in which the United States was the single true military superpower, according to a May report, Offset-X, from the Special Competitive Studies Project, a non-partisan U.S. panel of experts headed by former Google Chairman Eric Schmidt. The report outlines a strategy for America to gain and maintain dominance over China in military technology.

If America fails to act, it “could see a shift in the balance of power globally, and a direct threat to the peace and stability that the United States has underwritten for nearly 80 years in the Indo-Pacific,” the report said. “This is not about the anxiety of no longer being the dominant power in the world; it is about the risks of living in a world in which the Chinese Communist Party becomes the dominant power.”

The stakes are also high for Beijing. If the U.S. alliance prevails, it will make it far harder for the People’s Liberation Army, or PLA, as the Chinese military is known, to seize democratically governed Taiwan, control the shipping lanes of East Asia and dominate its neighbors. Beijing sees Taiwan as an inalienable part of China and hasn’t ruled out the use of force to subdue it.

The Department of Defense had no comment “on this particular report,” a Pentagon spokesperson said in response to questions. But the department’s leadership, the spokesperson added, has been “very clear” regarding China as “our pacing challenge.” Regarding a possible attack on Taiwan, the spokesperson said, Defense Secretary Lloyd Austin and other senior leaders “have been very clear that we do not believe an invasion is imminent or inevitable, because deterrence today is real and strong.”

China’s defense ministry and foreign ministry didn’t respond to questions for this article.

A spokesperson for Australia’s Department of Defence said it was a priority to “translate disruptive new technologies into Australian Defence Force capability.” The department is investigating, among other things, “autonomous undersea warfare capabilities to complement its crewed submarines and surface fleet, and enhance their lethality and survivability,” the spokesperson said.

KILLER ROBOTS

Some leading military strategists say AI will herald a turning point in military power as dramatic as the introduction of nuclear weapons. Others warn of profound dangers if AI-driven robots begin making lethal decisions independently, and have called for a pause in AI research until agreement is reached on regulation related to the military application of AI.

Despite such misgivings, both sides are scrambling to field uncrewed machines that will exploit AI to operate autonomously: subs, warships, fighter jets, swarming aerial drones and ground combat vehicles. These programs amount to the development of killer robots to fight in tandem with human decision makers. Such robots – some designed to operate in teams with conventional ships, aircraft and ground troops – already have the potential to deliver sharp increases in firepower and change how battles are fought, according to military analysts.

Some, like Ghost Shark, are able to perform maneuvers no conventional military vehicle could survive – like diving thousands of meters below the ocean surface.

Perhaps even more revolutionary than autonomous weapons is the potential for AI systems to inform military commanders and help them decide how to fight – by absorbing and analyzing the vast quantities of data gathered from satellites, radars, sonar networks, signals intelligence and online traffic. Technologists say this information has grown so voluminous it is impossible for human analysts to digest. AI systems trained to crunch this data could give commanders a better and faster understanding of a battlefield and provide a range of options for military operations.

Conflict may also be on the verge of turning very personal. The capacity of AI systems to analyze surveillance imagery, medical records, social media behavior and even online shopping habits will allow for what technologists call “micro-targeting” – attacks with drones or precision weapons on key combatants or commanders, even if they are nowhere near the front lines. Kiev’s successful targeting of senior Russian military leaders in the Ukraine conflict is an early example.

AI could also be used to target non-combatants. Scientists have warned that swarms of small, lethal drones could target big groups of people, such as the entire population of military-aged males from a certain town, region or ethnic group.

“They could wipe out, say, all males between 12 and 60 in a city,” said computer scientist Stuart Russell in a BBC lecture on the role of AI in warfare broadcast in late 2021. “Unlike nuclear weapons, they leave no radioactive crater, and they keep all the valuable physical assets intact,” added Russell, a professor of computer science at the University of California, Berkeley.

The United States and China have both tested swarms of AI-powered drones. Last year, the U.S. military released footage of troops training with drone swarms. Another video shows personnel at Fort Campbell, Tennessee, testing swarms of drones in late 2021. The footage shows a man wearing video game-like goggles during the experiment.

For the U.S. alliance, swarms of cheap drones could offset China’s numerical advantage in missiles, warships and strike aircraft. This could become critical if the United States intervened against an assault by Beijing on Taiwan.

America will field “multiple thousands” of autonomous, unmanned systems within the next two years in a bid to offset China's advantage in numbers of weapons and people, the U.S. Deputy Secretary of Defense, Kathleen Hicks, said in an August 28 speech. “We’ll counter the PLA’s mass with mass of our own, but ours will be harder to plan for, harder to hit, harder to beat,” she said.

Even drones with limited AI capability can have an impact. Miniature, remote-controlled surveillance drones with some autonomy are already in service. One example is the pocket-sized Black Hornet 3 now being deployed by multiple militaries.

This drone can fit in the palm of a hand and is hard to detect, according to the website of Teledyne FLIR, the company that makes them. It is reminiscent of the movie “Eye in the Sky,” in which a bug-like drone is used against militants in Kenya. Weighing less than 33 grams, or a bit more than an ounce, it can fly almost silently for 25 minutes, sending back video and high-definition still images to its operator. It gives soldiers in the field a real-time understanding of what is happening around them, according to the company.

CHEAP AND EXPENDABLE

The AI military sector is dominated by software, an industry where change comes fast.

Anduril, maker of the AI-powered Ghost Shark, is trying to capitalize on the desire of the U.S. alliance to quickly team humans with intelligent machines. The company, which shares its name with a fictional sword in Tolkien’s “Lord of the Rings” saga, was founded in 2017 by Palmer Luckey, designer of the Oculus virtual reality headset, now owned by Facebook. Luckey sold Oculus VR to the social media giant for $2.3 billion in 2014.

Arnott, the Anduril engineer working on Ghost Shark, said the company is also supplying equipment to Ukraine. The Russians rapidly adapted to this gear deployed in battle, so Anduril has been pushing out regular updates to maintain an advantage.

“Something happens,” he said. “We get punched in the face. The customer gets hit with something, and we are able to take that, turn it around and push out a new feature.”

Arnott didn’t provide details of the equipment, but Anduril referred Reuters to a February announcement from the Biden administration that included the company’s ALTIUS 600 munition drone in a package of military aid to Ukraine. This drone can be deployed for intelligence, surveillance and reconnaissance. It can also be used as a kamikaze drone, armed with an explosive warhead that can fly into enemy targets.

Ukraine has already reportedly used drone surface craft packed with explosives to attack Russian shipping. Military commentators have suggested that Taiwan could use similar tactics to resist a Chinese invasion, launching big numbers of these vessels into the path of the fleet heading for its beaches.

Asked by Reuters about Taiwan’s drone program, the office of President Tsai Ing-wen said in June that the island had drawn “great inspiration” from Ukraine’s use of drones in its war with Russia.

China, the United States and U.S. allies have programs to build fleets of stealthy drone fighters that will fly in formation with crewed aircraft. The drones could peel off to attack targets, jam communications or fly ahead so their radars and other sensors could provide early warning or find targets. These robots could instantly share information with each other and human operators, according to military technology specialists.

America is planning to build a 1,000-strong fleet of these fighter drones, U.S. Air Force Secretary Frank Kendall told a warfare conference in Colorado in March. At the Zhuhai air show in November, China unveiled a jet fighter-like drone, the FH-97A, which will operate with a high degree of autonomy alongside manned combat aircraft, providing intelligence and added firepower, according to reports in the Chinese state-controlled media. China, the United States and Japan are also building large, uncrewed submarines similar to Australia’s Ghost Shark.

One overwhelming advantage of these autonomous weapons: Commanders can deploy them in big numbers without risking the lives of human crews. In some respects, performance improves, too.

Jet-powered robot fighters, for instance, could perform maneuvers the human body wouldn’t tolerate. This would include tight turns involving extreme G-forces, which can cause pilots to pass out. Aerial drones can also do away with the pressurized cockpits, oxygen supplies and ejector seats required to support a human pilot.

And robots don’t get tired. As long as they have power or fuel, they can carry on their missions indefinitely.

Because many robots are relatively cheap – a few million dollars for an advanced fighter drone, versus tens of millions for a piloted fighter jet – losses could be more readily absorbed. For commanders, that means more risk might become acceptable. A robot scout vehicle could approach an enemy ground position to send back high-definition images of defenses and obstacles, even if it is subsequently destroyed, according to Western military experts.

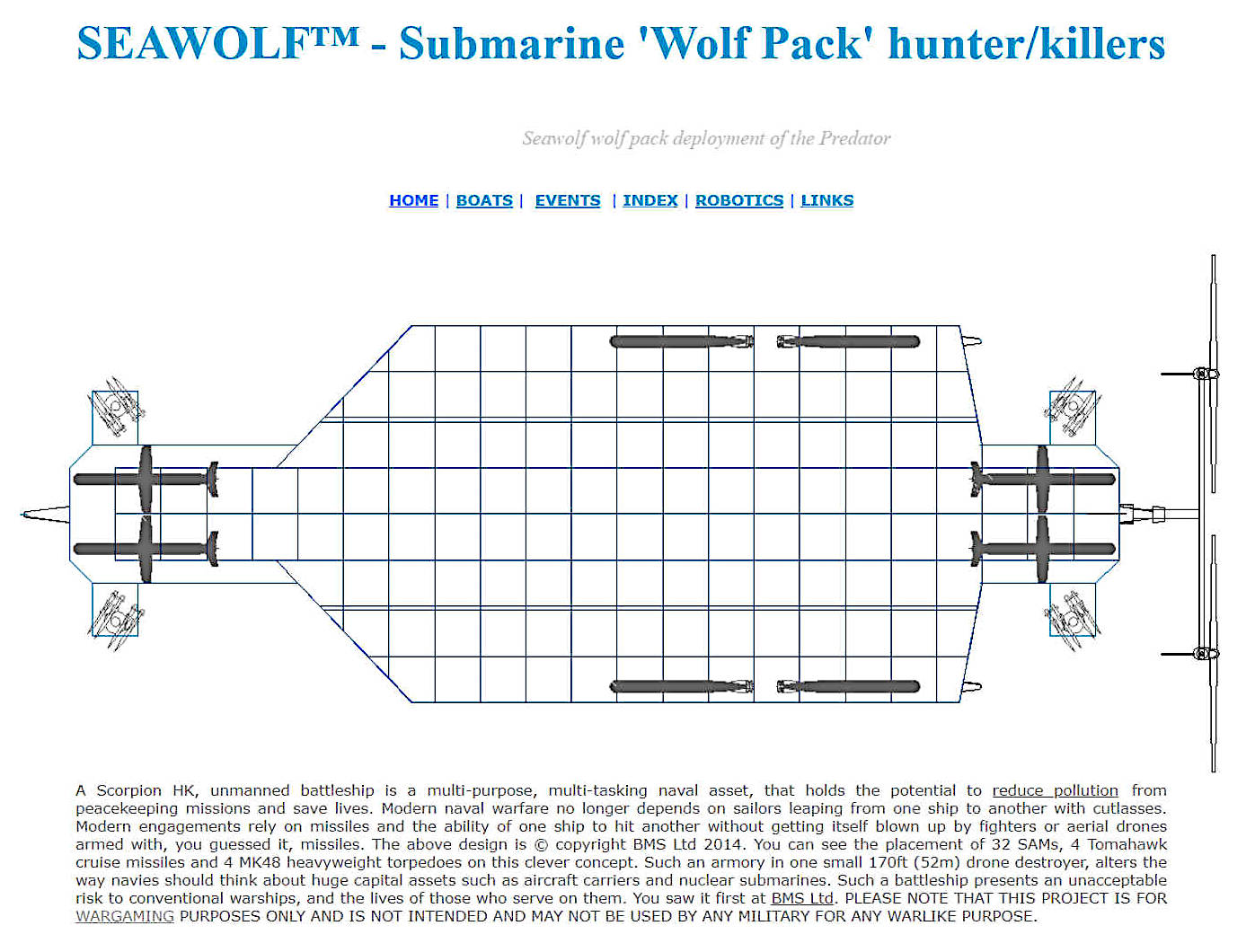

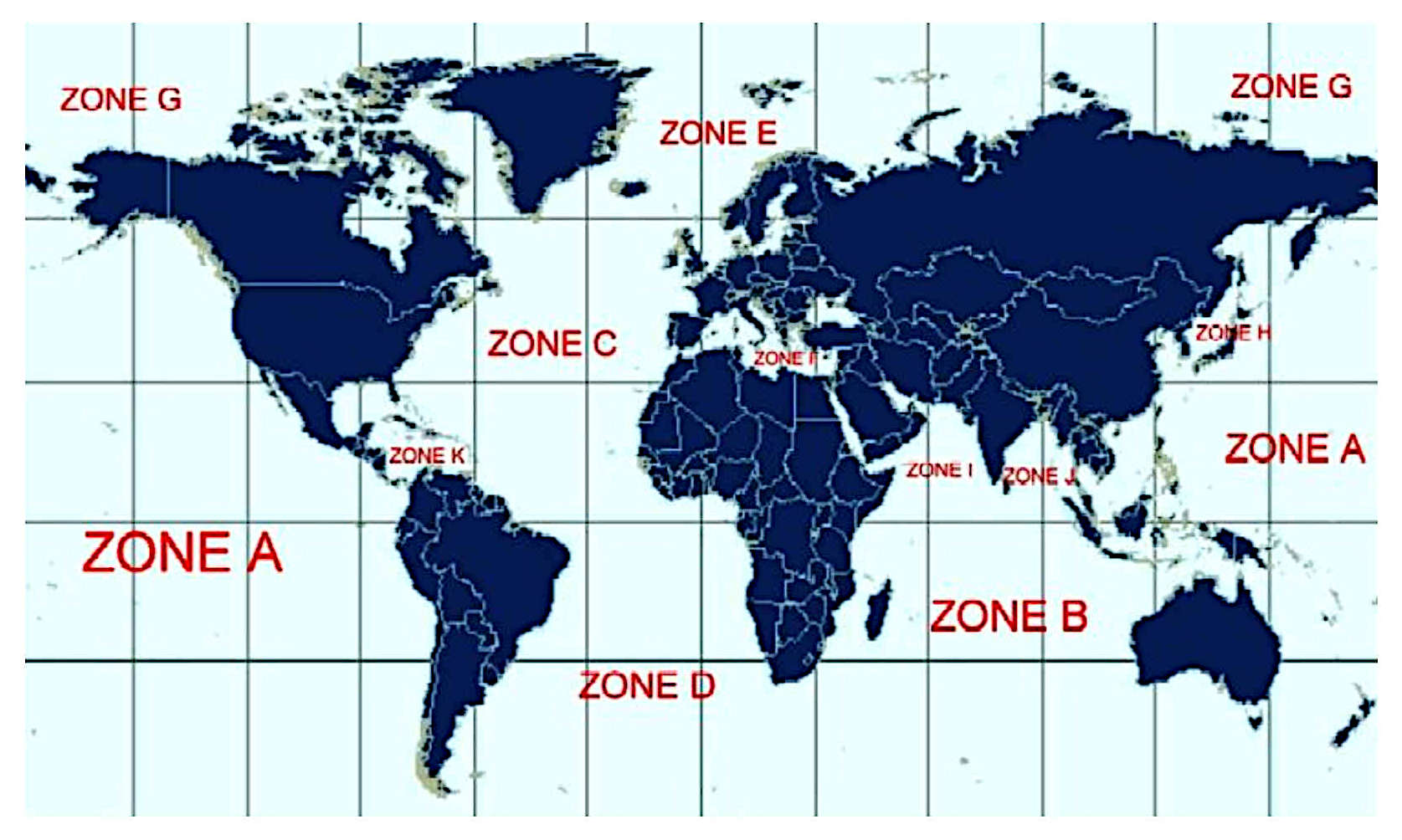

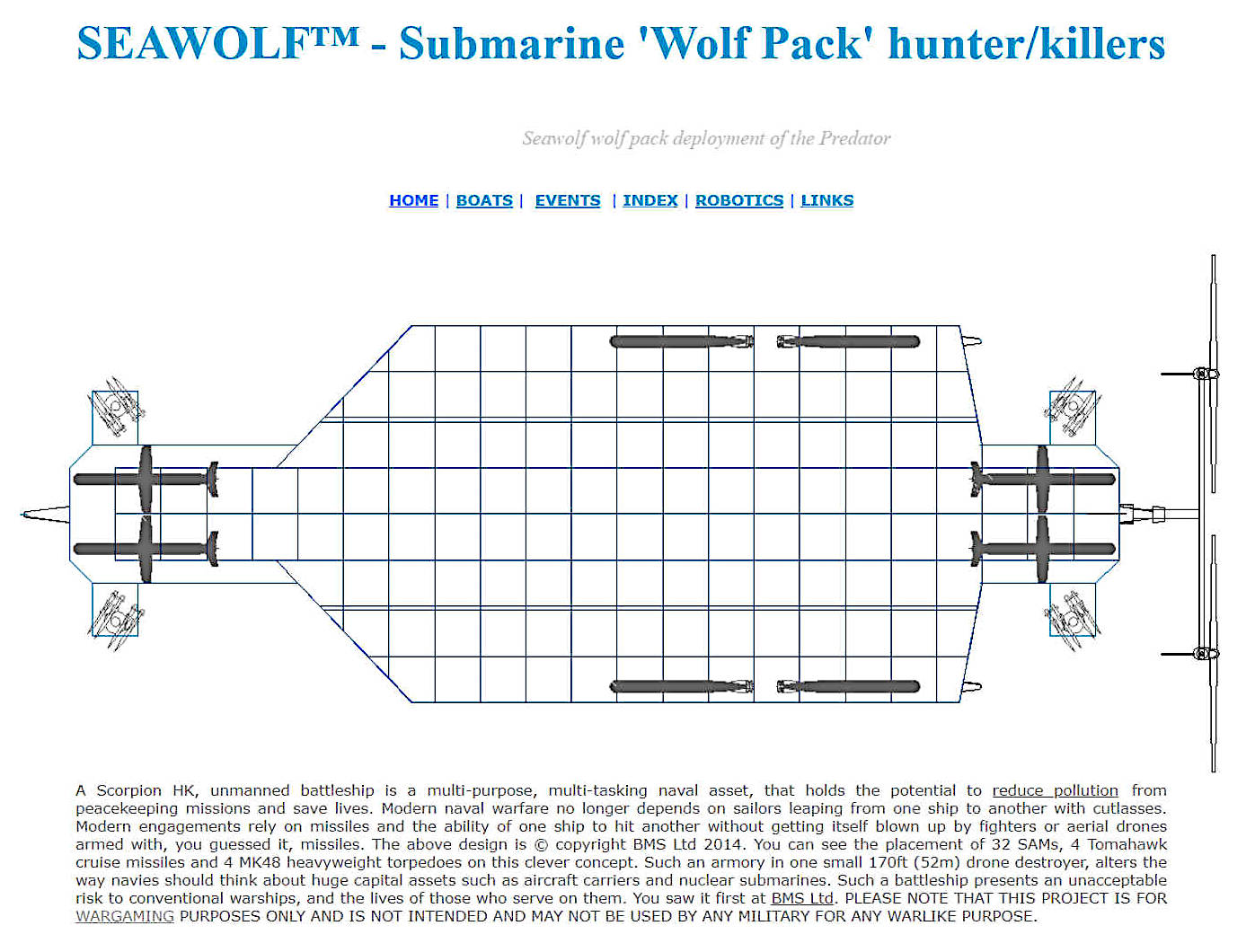

MOVING THE GOALPOSTS - This is an example of how to reduce the cost of naval operations by replacing conventional aircraft carriers and submarines with robot warships. The next logical upgrade will be to include cruise missile launch capability in an adapted Wolverine ZCC™ warship. This addition will complement surface-to-air missiles and torpedoes with intermediate-range nuclear weapons, some of which, such as the RK-55, have a phenomenal range and payload.

Development of the system could have proceeded by improving existing (off-the-shelf) weapon formats to make them smarter. Each Wolverine ZCC (WZCC) will work with its neighboring WZCCs to protect each other. Attack one WZCC and you take them all on, like a swarm of angry hornets. You might fend off one hornet, but if ten hornets all attack from different angles, you are going to get stung. Likewise, the new Gerald R Ford aircraft carrier might survive an attack by a lone WZCC, but will stand no chance against an attack by a swarm of WZCCs.

Remember, this was proposed for development in 2013. If that had taken place, China might have realized that its ordinary naval ambitions would come to naught, and perhaps not squandered its GDP on a floating armada of scrap metal - all bar the shouting.

WHO IS WINNING?

So far, it is difficult to say who is winning the battle to master AI-powered weapons.

China’s huge and sophisticated manufacturing sector gives it advantages in mass production. America remains home to most of the world’s dominant and most innovative technology and software companies. But tight secrecy surrounds the projects on both sides.

Beijing does not publish any detailed breakdown of its increasing defense spending, including outlays on AI. Still, the available disclosures of spending on AI military research do show that outlays on AI and machine learning grew sharply in the decade from 2010.

In 2011, the Chinese government spent about $3.1 million on unclassified AI research at Chinese universities and $8.5 million on machine learning, according to Datenna, a Netherlands-based private research company specializing in open source intelligence on China's industrial and technology sectors. By 2019, AI spending was about $86 million and outlays on machine learning were about $55 million, Datenna said.

“The biggest challenge is we don’t really know how good the Chinese are, particularly when it comes to the military applications of AI,” said Martijn Rasser, a former analyst with the U.S. Central Intelligence Agency and now managing director of Datenna. “Obviously, China is producing world class research, but what the PLA and PLA-affiliated research institutions are doing specifically is much more difficult to discern.”

The July 1 death in a traffic accident in Beijing of a leading Chinese military AI expert provides a small window into the country’s ambitions.

At the time he died, Colonel Feng Yanghe, 38, was working on a “major task,” state-controlled China Daily reported, without going into detail. Feng had studied at the Department of Statistics at Harvard University, the report said.

In China, he headed a team that developed an AI system called “War Skull,” which China Daily said could “draft operation plans, conduct risk assessments and provide backup plans in advance based on incomplete tactical data.” The system had been used in exercises by the PLA, the report said.

The Biden Administration is so concerned about the tech race that it has moved to block China’s drive to conquer AI and other advanced technologies. Last month, Biden signed an executive order that will prohibit some new U.S. investment in China in sensitive technologies that could be used to bolster military capacity.

Anduril, the weaponry start-up created by VR-headset pioneer Palmer Luckey, has ambitions to be a major high-tech defense contractor. The Costa Mesa, California-based company now employs more than 1,800 staff in the United States, the United Kingdom and Australia. Luckey’s biography on the company website says he formed Anduril to “radically transform the defense capabilities of the United States and its allies by fusing artificial intelligence with the latest hardware developments.”

Anduril said Luckey was unavailable to be interviewed for this story.

The core of Anduril’s business is its Lattice operating system, which combines technologies including sensor fusion, computer vision, edge computing and AI. The Lattice system drives the autonomous operation of hardware that the company supplies, including aerial drones, anti-drone systems and submarines such as Ghost Shark.

In its biggest commercial success so far, Anduril early last year won a contract worth almost $1 billion to supply U.S. Special Operations Command with a counter-drone system. The U.K. Ministry of Defense has also awarded the company a contract for a base defense system.

Arnott wouldn’t describe the capabilities of Ghost Shark. The vessels will be built at a secret plant on Sydney Harbour in close collaboration with the Australian Navy and defense scientists. “We absolutely can’t talk about any of the applications of this,” he said.

But a smaller, three-tonne autonomous submarine in Anduril’s product line-up, the Dive-LD, suggests what unmanned AI-powered subs can do. The Dive-LD can reach depths of 6,000 meters and operate autonomously for 10 days, according to the company website. The sub, which has a 3D-printed exterior, is capable of engaging in mine counter-warfare and anti-submarine warfare, the site says.

With no need for a pressure hull, Anduril’s Dive-LD can descend far deeper than the manned submarines in military service. The maximum depth reachable by military subs is usually classified, but naval analysts told Reuters it is somewhere between 300 and 900 meters. The ability to descend to much greater depths can make a sub tougher to detect and attack.

Veteran navy officers say dozens of autonomous submarines like Ghost Shark, armed with a mix of torpedoes, missiles and mines, could lurk off an enemy’s coast or lie in wait at a strategically important waterway or chokepoint. They could also be assigned to strike at targets their AI-powered operating systems have been taught to recognize.

Australia’s nuclear subsea fleet will be more formidable than the unmanned submarines of today. But it will also take much longer to materialize.

In the first part of the project, the United States will supply up to five Virginia-class submarines to Canberra. The first of those subs will not enter service until early next decade. A further eight of a new class of subs will then be built starting from the 2040s, as part of the same AUD$368 billion project, under the AUKUS agreement, a defense-technology collaboration between Australia, Britain and the United States.

By the time this fleet is an effective force, big numbers of lethal robots operating in teams with human troops and traditional crewed weapons may have changed the nature of war, military strategists say.

“There is a lot of warfare that is dull, dirty and dangerous,” said Arnott. “It is a lot better to do that with a machine.”

HUMAN-MACHINE TEAMS ARE ABOUT TO RESHAPE WARFARE

Some technology experts believe innovative commercial software developers now entering the arms market are challenging the dominance of the traditional defense industry, which produces big-ticket weapons, sometimes at glacial speed.

It is too early to say if big, human-crewed weapons like submarines or reconnaissance helicopters will go the way of the battleship, which was rendered obsolete with the rise of air power. But aerial, land and underwater robots, teamed with humans, are poised to play a major role in warfare.

Evidence of such change is already emerging from the war in Ukraine. There, even rudimentary teams of humans and machines operating without significant artificial-intelligence powered autonomy are reshaping the battlefield. Simple, remotely piloted drones have greatly improved the lethality of artillery, rockets and missiles in Ukraine, according to military analysts who study the conflict.

Kathleen Hicks, the U.S. deputy secretary of defense, said in an Aug. 28 speech at a conference on military technology in Washington that traditional military capabilities “remain essential.” But she noted that the Ukraine conflict has shown that emerging technology developed by commercial and non-traditional companies could be “decisive in defending against modern military aggression.”

Both Russian and Ukrainian forces are integrating traditional weapons with AI, satellite imaging and communications, as well as smart and loitering munitions, according to a May report from the Special Competitive Studies Project, a non-partisan U.S. panel of experts. The battlefield is now a patchwork of deep trenches and bunkers where troops have been “forced to go underground or huddle in cellars to survive,” the report said.

Some military strategists have noted that in this conflict, attack and transport helicopters have become so vulnerable that they have been almost forced from the skies, their roles now increasingly handed over to drones.

“Uncrewed aerial systems have already taken crewed reconnaissance helicopters out of a lot of their missions,” said Mick Ryan, a former Australian army major general who publishes regular commentaries on the conflict. “We are starting to see ground-based artillery observers replaced by drones. So, we are already starting to see some replacement.”

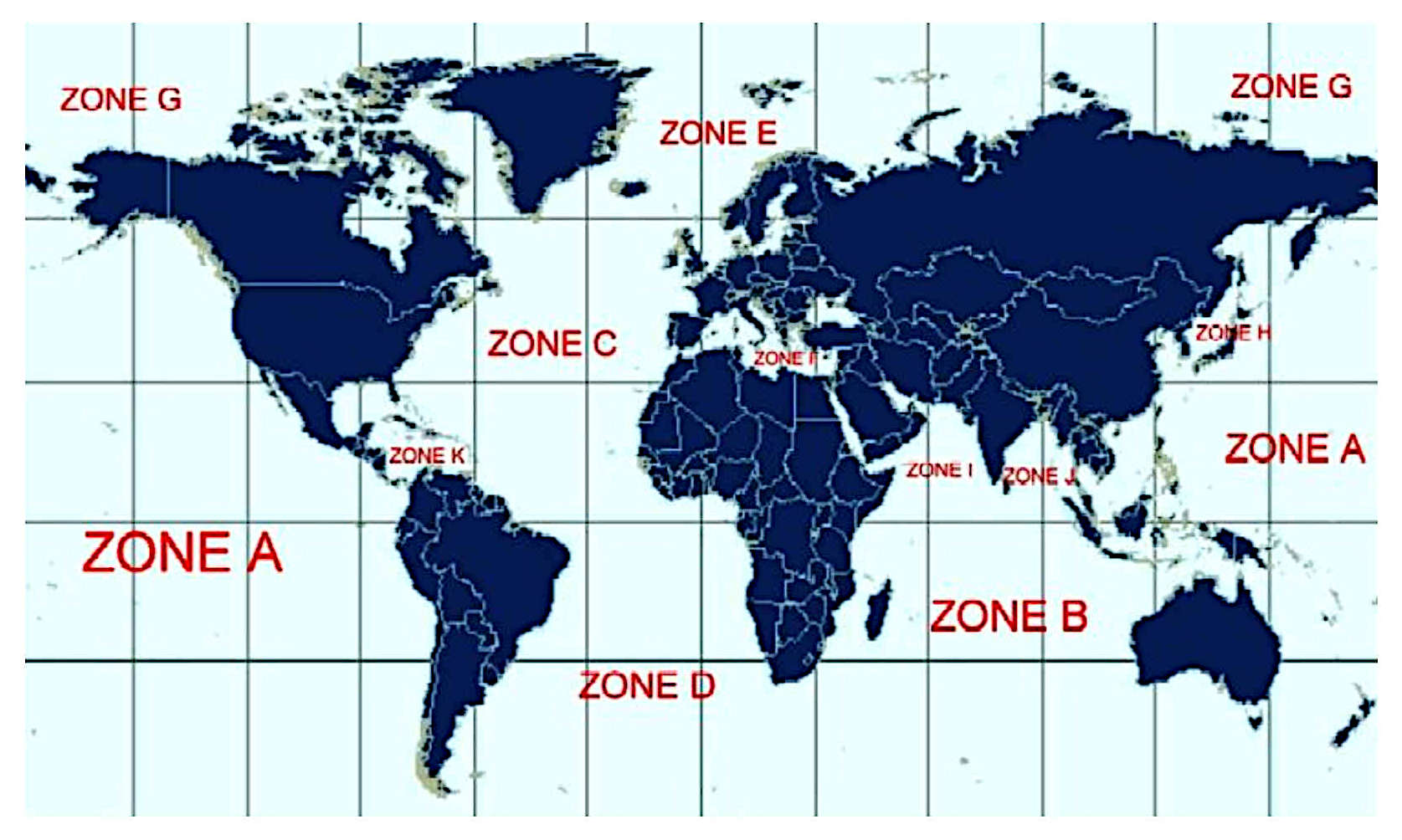

As long ago as 2013, ocean-going drones were predicted as replacements for submarines, destroyers and conventional aircraft carriers: the SeaWolf ZCC and the Scorpion HK seen above, armed with SAMs, Tomahawks and Mk 48 torpedoes.

The British government were well aware of this project and the associated patent application, but actively blocked development to keep their military contractors well fed with fat contracts for all the usual suspects of procurement fraud - something built into defence budgets, would you believe. We needed a conflict like Russia vs Ukraine to accelerate innovative robotic warfare, where results matter, not inflated opinions and kleptocratic agendas. Suitably armed and armoured drone soldiers hold the potential to secure lasting peace, especially by curbing the rapidly expanding Chinese Navy - which, like their Grand building boom, will then be another nail in the coffin of Xi Jinping's absurd world domination agenda, which is causing economic issues, much as Vladimir Putin is now experiencing for taking a punt at Volodymyr Zelenskyy. That assumes NATO wises up quickly enough to parry China's bullying in the East and South China seas.

THE NATION 23 FEBRUARY 2024 - THE KILLER ROBOTS ARE HERE. IT'S TIME TO BE WORRIED

When the leading advocates of autonomous weaponry tell us to be concerned about the unintended dangers posed by their use in battle, the rest of us should be worried.

Yes, it’s already time to be worried - very worried. As the wars in Ukraine and Gaza have shown, the earliest drone equivalents of “killer robots” have made it onto the battlefield and proved to be devastating weapons. But at least they remain largely under human control. Imagine, for a moment, a world of war in which those aerial drones (or their ground and sea equivalents) controlled us, rather than vice versa. Then we would be on a destructively different planet in a fashion that might seem almost unimaginable today. Sadly, though, it’s anything but unimaginable, given the work on artificial intelligence (AI) and robot weaponry that the major powers have already begun. Now, let me take you into that arcane world and try to envision what the future of warfare might mean for the rest of us.

By combining AI with advanced robotics, the US military and those of other advanced powers are already hard at work creating an array of self-guided “autonomous” weapons systems - combat drones that can employ lethal force independently of any human officers meant to command them. Called “killer robots” by critics, such devices include a variety of uncrewed or “unmanned” planes, tanks, ships, and submarines capable of autonomous operation. The US Air Force, for example, is developing its “collaborative combat aircraft,” an unmanned aerial vehicle (UAV) intended to join piloted aircraft on high-risk missions. The Army is similarly testing a variety of autonomous unmanned ground vehicles (UGVs), while the Navy is experimenting with both unmanned surface vessels (USVs) and unmanned undersea vessels (UUVs, or drone submarines). China, Russia, Australia, and Israel are also working on such weaponry for the battlefields of the future.

The imminent appearance of those killing machines has generated concern and controversy globally, with some countries already seeking a total ban on them and others, including the United States, planning to authorize their use only under human-supervised conditions. In Geneva, a group of states has even sought to prohibit the deployment and use of fully autonomous weapons, citing a 1980 UN treaty, the Convention on Certain Conventional Weapons, that aims to curb or outlaw non-nuclear munitions believed to be especially harmful to civilians. Meanwhile, in New York, the UN General Assembly held its first discussion of autonomous weapons last October and is planning a full-scale review of the topic this coming fall.

For the most part, debate over the battlefield use of such devices hinges on whether they will be empowered to take human lives without human oversight. Many religious and civil society organizations argue that such systems will be unable to distinguish between combatants and civilians on the battlefield and so should be banned in order to protect noncombatants from death or injury, as is required by international humanitarian law. American officials, on the other hand, contend that such weaponry can be designed to operate perfectly well within legal constraints.

However, neither side in this debate has addressed the most potentially unnerving aspect of using them in battle: the likelihood that, sooner or later, they’ll be able to communicate with each other without human intervention and, being “intelligent,” will be able to come up with their own unscripted tactics for defeating an enemy - or something else entirely. Such computer-driven groupthink, labeled “emergent behavior” by computer scientists, opens up a host of dangers not yet being considered by officials in Geneva, Washington, or at the UN.

For the time being, most of the autonomous weaponry being developed by the American military will be unmanned (or, as they sometimes say, “uninhabited”) versions of existing combat platforms and will be designed to operate in conjunction with their crewed counterparts. While they might also have some capacity to communicate with each other, they’ll be part of a “networked” combat team whose mission will be dictated and overseen by human commanders. The Collaborative Combat Aircraft, for instance, is expected to serve as a “loyal wingman” for the manned F-35 stealth fighter, while conducting high-risk missions in contested airspace. The Army and Navy have largely followed a similar trajectory in their approach to the development of autonomous weaponry.

THE APPEAL OF "ROBOT SWARMS"

However, some American strategists have championed an alternative approach to the use of autonomous weapons on future battlefields in which they would serve not as junior colleagues in human-led teams but as coequal members of self-directed robot swarms. Such formations would consist of scores or even hundreds of AI-enabled UAVs, USVs, or UGVs - all able to communicate with one another, share data on changing battlefield conditions, and collectively alter their combat tactics as the group-mind deems necessary.

“Emerging robotic technologies will allow tomorrow’s forces to fight as a swarm, with greater mass, coordination, intelligence and speed than today’s networked forces,” predicted Paul Scharre, an early enthusiast of the concept, in a 2014 report for the Center for a New American Security (CNAS). “Networked, cooperative autonomous systems,” he wrote then, “will be capable of true swarming—cooperative behavior among distributed elements that gives rise to a coherent, intelligent whole.”

As Scharre made clear in his prophetic report, any full realization of the swarm concept would require the development of advanced algorithms that would enable autonomous combat systems to communicate with each other and “vote” on preferred modes of attack. This, he noted, would involve creating software capable of mimicking ants, bees, wolves, and other creatures that exhibit “swarm” behavior in nature. As Scharre put it, “Just like wolves in a pack present their enemy with an ever-shifting blur of threats from all directions, uninhabited vehicles that can coordinate maneuver and attack could be significantly more effective than uncoordinated systems operating en masse.”

In 2014, however, the technology needed to make such machine behavior possible was still in its infancy. To address that critical deficiency, the Department of Defense proceeded to fund research in the AI and robotics field, even as it also acquired such technology from private firms like Google and Microsoft. A key figure in that drive was Robert Work, a former colleague of Paul Scharre’s at CNAS and an early enthusiast of swarm warfare. Work served from 2014 to 2017 as deputy secretary of defense, a position that enabled him to steer ever-increasing sums of money to the development of high-tech weaponry, especially unmanned and autonomous systems.

FROM MOSAIC TO REPLICATOR

Much of this effort was delegated to the Defense Advanced Research Projects Agency (DARPA), the Pentagon’s in-house high-tech research organization. As part of a drive to develop AI for such collaborative swarm operations, DARPA initiated its “Mosaic” program, a series of projects intended to perfect the algorithms and other technologies needed to coordinate the activities of manned and unmanned combat systems in future high-intensity combat with Russia and/or China.

“Applying the great flexibility of the mosaic concept to warfare,” explained Dan Patt, deputy director of DARPA’s Strategic Technology Office, “lower-cost, less complex systems may be linked together in a vast number of ways to create desired, interwoven effects tailored to any scenario. The individual parts of a mosaic are attritable [dispensable], but together are invaluable for how they contribute to the whole.”

This concept of warfare apparently undergirds the new “Replicator” strategy announced by Deputy Secretary of Defense Kathleen Hicks just last summer. “Replicator is meant to help us overcome [China’s] biggest advantage, which is mass. More ships. More missiles. More people,” she told arms industry officials last August. By deploying thousands of autonomous UAVs, USVs, UUVs, and UGVs, she suggested, the US military would be able to outwit, outmaneuver, and overpower China’s military, the People’s Liberation Army (PLA). “To stay ahead, we’re going to create a new state of the art.… We’ll counter the PLA’s mass with mass of our own, but ours will be harder to plan for, harder to hit, harder to beat.”

To obtain both the hardware and software needed to implement such an ambitious program, the Department of Defense is now seeking proposals from traditional defense contractors like Boeing and Raytheon as well as AI startups like Anduril and Shield AI. While large-scale devices like the Air Force’s Collaborative Combat Aircraft and the Navy’s Orca Extra-Large UUV may be included in this drive, the emphasis is on the rapid production of smaller, less complex systems like AeroVironment’s Switchblade attack drone, now used by Ukrainian troops to take out Russian tanks and armored vehicles behind enemy lines.

At the same time, the Pentagon is already calling on tech startups to develop the necessary software to facilitate communication and coordination among such disparate robotic units and their associated manned platforms. To facilitate this, the Air Force asked Congress for $50 million in its fiscal year 2024 budget to underwrite what it ominously enough calls Project VENOM, or “Viper Experimentation and Next-generation Operations Model.” Under VENOM, the Air Force will convert existing fighter aircraft into AI-governed UAVs and use them to test advanced autonomous software in multi-drone operations. The Army and Navy are testing similar systems.

WHEN SWARMS CHOOSE THEIR OWN PATH

In other words, it’s only a matter of time before the US military (and presumably China’s, Russia’s, and perhaps those of a few other powers) will be able to deploy swarms of autonomous weapons systems equipped with algorithms that allow them to communicate with each other and jointly choose novel, unpredictable combat maneuvers while in motion. Any participating robotic member of such swarms would be given a mission objective (“seek out and destroy all enemy radars and anti-aircraft missile batteries located within these [specified] geographical coordinates”) but not be given precise instructions on how to do so. That would allow them to select their own battle tactics in consultation with one another. If the limited test data we have is anything to go by, this could mean employing highly unconventional tactics never conceived for (and impossible to replicate by) human pilots and commanders.

The propensity for such interconnected AI systems to engage in novel, unplanned outcomes is what computer experts call “emergent behavior.” As ScienceDirect, a digest of scientific journals, explains it, “An emergent behavior can be described as a process whereby larger patterns arise through interactions among smaller or simpler entities that themselves do not exhibit such properties.” In military terms, this means that a swarm of autonomous weapons might jointly elect to adopt combat tactics none of the individual devices were programmed to perform - possibly achieving astounding results on the battlefield, but also conceivably engaging in escalatory acts unintended and unforeseen by their human commanders, including the destruction of critical civilian infrastructure or communications facilities used for nuclear as well as conventional operations.

At this point, of course, it’s almost impossible to predict what an alien group-mind might choose to do if armed with multiple weapons and cut off from human oversight. Supposedly, such systems would be outfitted with failsafe mechanisms requiring that they return to base if communications with their human supervisors were lost, whether due to enemy jamming or for any other reason. Who knows, however, how such thinking machines would function in demanding real-world conditions or if, in fact, the group-mind would prove capable of overriding such directives and striking out on its own.

What then? Might they choose to keep fighting beyond their preprogrammed limits, provoking unintended escalation—even, conceivably, of a nuclear kind? Or would they choose to stop their attacks on enemy forces and instead interfere with the operations of friendly ones, perhaps firing on and devastating them (as Skynet does in the classic science fiction Terminator movie series)? Or might they engage in behaviors that, for better or infinitely worse, are entirely beyond our imagination?

Top US military and diplomatic officials insist that AI can indeed be used without incurring such future risks and that this country will only employ devices that incorporate thoroughly adequate safeguards against any future dangerous misbehavior. That is, in fact, the essential point made in the “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy” issued by the State Department in February 2023. Many prominent security and technology officials are, however, all too aware of the potential risks of emergent behavior in future robotic weaponry and continue to issue warnings against the rapid utilization of AI in warfare.

Of particular note is the final report that the National Security Commission on Artificial Intelligence issued in February 2021. Cochaired by Robert Work (back at CNAS after his stint at the Pentagon) and Eric Schmidt, former CEO of Google, the commission recommended the rapid utilization of AI by the US military to ensure victory in any future conflict with China and/or Russia. However, it also voiced concern about the potential dangers of robot-saturated battlefields.

“The unchecked global use of such systems potentially risks unintended conflict escalation and crisis instability,” the report noted. This could occur for a number of reasons, including “because of challenging and untested complexities of interaction between AI-enabled and autonomous weapon systems [that is, emergent behaviors] on the battlefield.” Given that danger, it concluded, “countries must take actions which focus on reducing risks associated with AI-enabled and autonomous weapon systems.”

When the leading advocates of autonomous weaponry tell us to be concerned about the unintended dangers posed by their use in battle, the rest of us should be worried indeed. Even if we lack the mathematical skills to understand emergent behavior in AI, it should be obvious that humanity could face a significant risk to its existence, should killing machines acquire the ability to think on their own. Perhaps they would surprise everyone and decide to take on the role of international peacekeepers, but given that they’re being designed to fight and kill, it’s far more probable that they might simply choose to carry out those instructions in an independent and extreme fashion.

If so, there could be no one around to put an RIP on humanity’s gravestone.

LINKS & REFERENCE

https://www.thenation.com/article/world/killer-robots-drone-warfare/

https://www.reuters.com/investigates/special-report/us-china-tech-drones/


|